Explore by categories

Genie 3

Genie 3, developed by Google DeepMind, is a third-generation world model that generates diverse virtual worlds in real time from text prompts.

GPT-OSS

GPT-OSS is an open-weight language model released by OpenAI. It emphasizes reasoning capability, efficiency, and practical deployment across diverse environments.

HunyuanWorld-1.0

HunyuanWorld-1.0 is an open-source 3D world generation model released by Tencent that generates explorable 3D scenes from text or image input.

Mureka V7

Mureka V7 is an AI music generation model released by Kunlun Wanwei, designed to produce more expressive and emotionally engaging music.

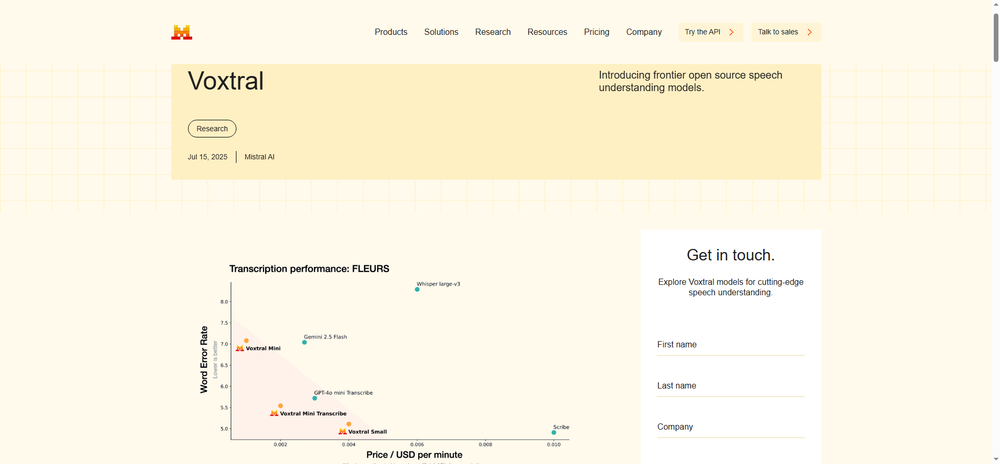

Voxtral

Voxtral is an open-source speech recognition model developed by Mistral AI, designed for efficient speech understanding and transcription.

Kimi K2

Kimi K2 is a language model developed by Moonshot AI, utilizing a Mixture-of-Experts (MoE) architecture.

Grok 4

Grok 4 is an artificial intelligence model launched by xAI, with features aimed at improving reasoning and user experience.

Marey

Marey is an AI video generation model developed by Los Angeles-based startup Moonvalley that gives creators finer-grained control over animation.

MuseSteamer

MuseSteamer is a multimodal AI video generation model launched by Baidu, designed for high-quality video production by enterprises and content creators.