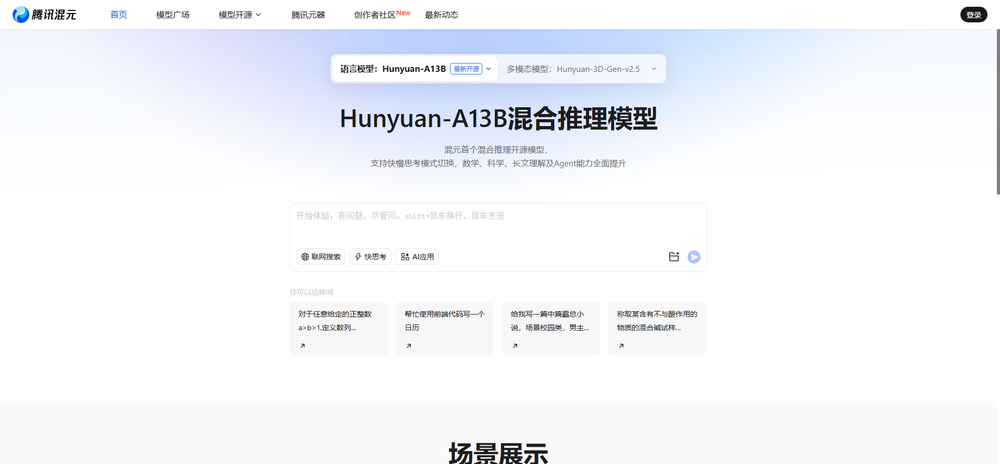

Hunyuan-A13B is an open-source large language model from Tencent, built on a Mixture-of-Experts (MoE) architecture.

Key Features

Efficient Parameter Activation: Hunyuan-A13B has 80 billion parameters in total, but only 13 billion are active for any given token during inference. Because each token is routed through only a subset of the model's experts, it uses far less compute per token than a dense model of the same total size while maintaining strong performance.
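To make the activation ratio concrete: 13B of 80B parameters means roughly 13 / 80 ≈ 16% of the weights take part in computing any single token. The sketch below is a toy, pure-Python Mixture-of-Experts layer with top-k routing, written only to illustrate the mechanism. The class name `ToyMoELayer`, the dimensions, the expert count, and `top_k` are hypothetical illustration values, not Hunyuan-A13B's actual configuration, and each "expert" is a single matrix standing in for a feed-forward block.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class ToyMoELayer:
    """Toy MoE layer: a router scores every expert, but only the top_k experts run."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        self.top_k = top_k
        # Router: one gate vector per expert, giving one logit per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each expert is a dim x dim matrix standing in for a feed-forward block.
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        # Counts how many times each expert has actually been evaluated.
        self.calls = [0] * num_experts

    def forward(self, token):
        logits = matvec(self.router, token)
        # Select the top_k experts by router score; the rest are skipped entirely.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        # Renormalize the gate weights over the selected experts only.
        gates = softmax([logits[i] for i in chosen])
        out = [0.0] * self.dim
        for gate, i in zip(gates, chosen):
            self.calls[i] += 1
            expert_out = matvec(self.experts[i], token)
            out = [o + gate * e for o, e in zip(out, expert_out)]
        return out, chosen

    def expert_param_counts(self):
        """Return (total, active-per-token) expert weights; router weights are excluded."""
        per_expert = self.dim * self.dim
        return len(self.experts) * per_expert, self.top_k * per_expert


layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
output, chosen = layer.forward([0.5, -1.0, 0.25, 2.0])
total, active = layer.expert_param_counts()
print(f"experts used: {chosen}, active expert weights: {active}/{total}")
```

With 8 experts and `top_k=2`, only 2 of the 8 expert matrices run for each token, so 32 of the 128 expert weights are active. Components that every token uses, such as attention layers, are not modeled in this toy.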

Hybrid Inference Modes: The model supports two inference modes, "Fast Thinking" and "Slow Thinking." Fast Thinking suits tasks that need quick responses, while Slow Thinking is designed for complex logical reasoning and in-depth analysis, so users can pick the mode that fits each task.
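One way to choose a mode per request is to decide from the task type in application code. The sketch below assumes the `/think` and `/no_think` prompt prefixes that the Hunyuan-A13B model card describes for switching between Slow and Fast Thinking; check the current model card before relying on them. The `Task` categories and the `SLOW_TASKS` policy are hypothetical examples of such a decision rule, not part of the model.

```python
from enum import Enum

# Prefixes assumed from the model card's description of mode switching; verify before use.
SLOW_THINKING_PREFIX = "/think"
FAST_THINKING_PREFIX = "/no_think"


class Task(Enum):
    # Hypothetical task categories for illustration.
    CHAT = "chat"
    LOOKUP = "lookup"
    MATH = "math"
    CODE_REVIEW = "code_review"


# Hypothetical policy: tasks that benefit from step-by-step reasoning get Slow Thinking.
SLOW_TASKS = {Task.MATH, Task.CODE_REVIEW}


def build_messages(prompt: str, task: Task) -> list:
    """Build a chat message list whose user turn selects the thinking mode."""
    prefix = SLOW_THINKING_PREFIX if task in SLOW_TASKS else FAST_THINKING_PREFIX
    return [{"role": "user", "content": prefix + prompt}]


print(build_messages("What is the capital of France?", Task.LOOKUP))
print(build_messages("Prove that the square root of 2 is irrational.", Task.MATH))
```

The resulting list can then be passed to whatever chat interface serves the model. Keeping the policy in one set makes it easy to adjust which tasks pay the extra latency of Slow Thinking.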

Extended Context Support: Hunyuan-A13B natively supports a context window of up to 256K tokens and maintains stable performance across long inputs, which makes it well suited to long-text tasks.
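Before sending a long document, an application typically checks that the prompt plus the expected answer fits in the window. The sketch below assumes "256K" means 256 × 1024 = 262,144 tokens and estimates token counts with a rough four-characters-per-token heuristic. Both the heuristic and the helper names are assumptions for illustration; exact counts require the model's own tokenizer, and the ratio for Chinese text, for instance, differs considerably.

```python
import math

# Assumes "256K" = 256 * 1024 tokens; check the model's configuration for the exact limit.
CONTEXT_WINDOW = 256 * 1024
# Rough heuristic for English text; use the real tokenizer for exact counts.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate the token count of text from its character length."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, max_output_tokens: int = 8192,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the estimated prompt plus the reserved output budget fits the window."""
    return estimate_tokens(text) + max_output_tokens <= context_window


def split_for_context(text: str, max_output_tokens: int = 8192,
                      context_window: int = CONTEXT_WINDOW) -> list:
    """Split text into chunks that each fit alongside the reserved output budget."""
    chunk_chars = int((context_window - max_output_tokens) * CHARS_PER_TOKEN)
    return [text[i:i + chunk_chars] for i in range(0, len(text), chunk_chars)]


report = "x" * 2_000_000  # stand-in for a very long document (~500K estimated tokens)
print(fits_in_context(report))          # False: exceeds the window
print(len(split_for_context(report)))   # 2 chunks
```

Reserving an output budget up front matters because the window is shared between the prompt and the generated answer, and Slow Thinking in particular can produce long outputs.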

Enhanced Agent Capabilities: The model is optimized for tool use in agent tasks and performs well on agent benchmarks, particularly those that test complex instruction following and tool calling.

Quantized Versions: Tencent has also released quantized versions of Hunyuan-A13B in FP8 and Int4 formats. These significantly reduce storage requirements while keeping performance close to that of the original model, making them suitable for deployment on mid- to low-end devices.
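A back-of-the-envelope calculation shows why lower-precision formats matter. Although only 13B parameters are active per token, all 80B must still be stored, so weight storage scales with the total count. The sketch below assumes a 16-bit (BF16) baseline and counts weights only, ignoring quantization scales, activations, and the KV cache, so real memory use is higher; the function and dictionary names are illustrative.

```python
TOTAL_PARAMS = 80_000_000_000  # total parameters (approximate, from the model description)

# Bits per weight for each format; BF16 is assumed as the unquantized baseline.
BITS_PER_WEIGHT = {"BF16": 16, "FP8": 8, "Int4": 4}


def weight_storage_gb(num_params: int, bits: int) -> float:
    """Storage for the weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits / 8 / 1e9


for fmt, bits in BITS_PER_WEIGHT.items():
    print(f"{fmt}: {weight_storage_gb(TOTAL_PARAMS, bits):.0f} GB")
# Prints "BF16: 160 GB", "FP8: 80 GB", "Int4: 40 GB" on separate lines.
```

Int4 cuts weight storage to a quarter of the assumed BF16 baseline; the exact size of the released checkpoints will differ somewhat because of per-group scales and layers kept at higher precision.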

Application Scenarios

Agent Development: Hunyuan-A13B can carry out complex tasks through function calls, such as weather queries and data analysis. Its ability to follow complex instructions and produce well-formed tool calls makes it effective in agent applications.
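In a function-calling loop, the model emits a structured tool call, the application runs the matching function, and the result goes back to the model as a new message. The sketch below shows that dispatch step, assuming the model is served behind an OpenAI-compatible chat API that returns tool calls with an `id`, a `type`, and a `function` object holding `name` and JSON-encoded `arguments`. The `get_weather` tool and its canned response are hypothetical stand-ins for a real weather service.

```python
import json


# Hypothetical tool: a real agent would query a weather service here.
def get_weather(city: str, unit: str = "celsius") -> dict:
    return {"city": city, "temperature": 21, "unit": unit, "condition": "sunny"}


# Registry mapping tool names (as advertised to the model) to local functions.
TOOLS = {"get_weather": get_weather}

# Schema advertised to the model in the OpenAI-style "tools" request field.
WEATHER_TOOL_SCHEMA = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one model-issued tool call and wrap the result as a tool message."""
    name = tool_call["function"]["name"]
    if name not in TOOLS:
        raise ValueError(f"model requested unknown tool: {name}")
    # Arguments arrive as a JSON-encoded string, not a dict.
    args = json.loads(tool_call["function"]["arguments"])
    result = TOOLS[name](**args)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}


# Example tool call, in the shape an OpenAI-compatible server would return.
call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Shenzhen"}'},
}
print(run_tool_call(call))
```

Rejecting unknown tool names explicitly, rather than failing on a missing dictionary key, gives the agent loop a clear error it can report back to the model.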

Financial Analysis: With a context window of up to 256K tokens, the model is well suited to analyzing complete financial reports, supporting in-depth analysis and decision-making in the finance industry.

Educational Tutoring: In the education sector, Hunyuan-A13B can perform step-by-step reasoning for math and science problems, offering detailed solutions and guidance to improve learning outcomes.

Code Assistant: The model can assist across full-stack development, including code generation, debugging, and optimization, making it useful in software development and technical support scenarios.

Scientific Research Acceleration: In research, Hunyuan-A13B can assist with literature reviews, hypothesis generation, and similar tasks, helping researchers find relevant information and new ideas more quickly.

Long-Form Understanding and Generation: The model excels at understanding and generating long-form text, making it suitable for contract review, summarizing academic material, and other information-heavy tasks.

Natural Language Processing: Hunyuan-A13B delivers strong performance in text generation and question answering, providing accurate information and support across various NLP applications.

Low-Resource Deployment: Thanks to its efficient architecture and quantized releases, Hunyuan-A13B can run on mid- to low-end GPUs, lowering the technical barrier and making it accessible to individual developers and small and medium-sized enterprises.