GPT-OSS is an open-weight language model released by OpenAI. It places special emphasis on reasoning capabilities, efficiency, and practical deployment across diverse environments.

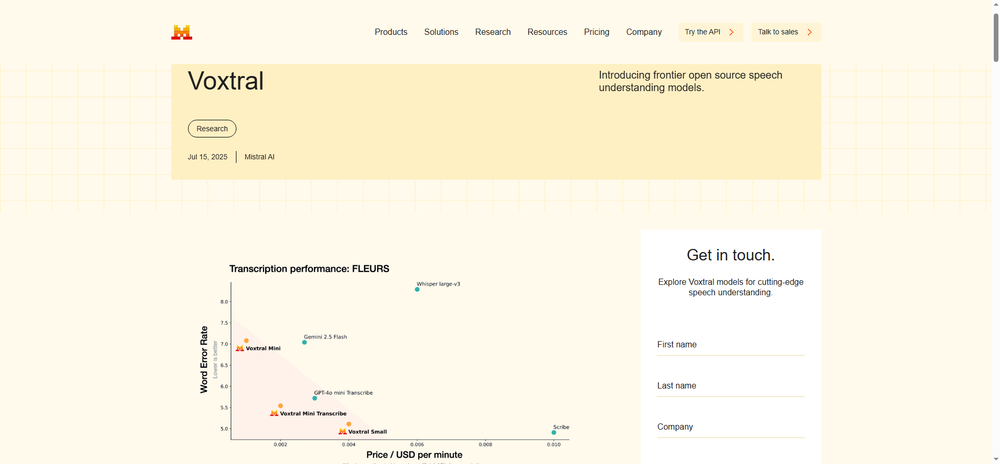

Voxtral is an open-source speech recognition model developed by Mistral AI, designed for efficient speech understanding and transcription.

Kimi K2 is a language model developed by Moonshot AI, built on a Mixture-of-Experts (MoE) architecture.

Hunyuan-A13B is an open-source large language model developed by Tencent, built on a Mixture-of-Experts (MoE) architecture.

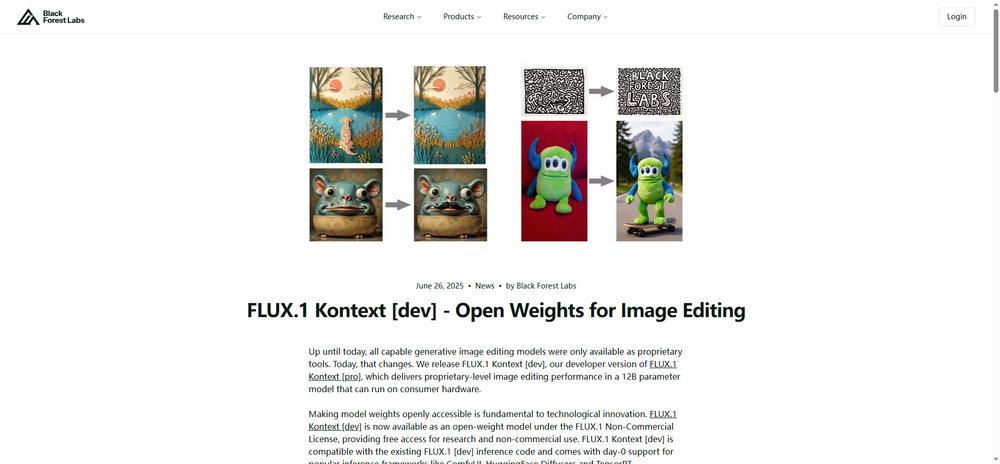

FLUX.1 Kontext [dev] is an advanced image editing model developed by Black Forest Labs, specializing in context-aware image generation and editing.

MiniMax-M1 is an open-source, large-scale reasoning model that combines hybrid attention with a Mixture-of-Experts (MoE) architecture.

Magistral is the first reasoning model released by Mistral AI, designed for complex reasoning tasks.

Gemma 3n is a multimodal generative AI model released by Google, designed to run efficiently on mobile devices.

Wan2.1 VACE is an all-in-one video generation and editing model open-sourced by Alibaba, covering video creation and editing in a single model.